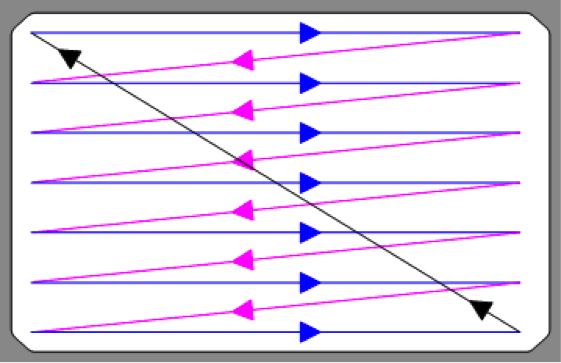

I’m sure that everyone reading this will be familiar with the fact that, in a television system, the camera scans an image using a pattern known as a raster. (A quick Google search for “raster scan” readily returns images such as the following to illustrate my point):

The image is scanned as a succession of lines, from the top-left pixel to the bottom-right. The scanning process is repeated at a fixed rate, typically 50 or 60 times per second, giving us the notion of “frame rate”. This much has been true since the very first 405-line TV transmissions of the 1930s and 1940s.
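As a minimal sketch of that raster order (the function names are hypothetical, chosen for illustration), the generator below visits pixels left to right along each line and lines from top to bottom. The second function does the related arithmetic for a progressive picture, counting active pixels only and ignoring blanking intervals, which is an assumption of the sketch.

```python
from typing import Iterator, Tuple


def raster_order(width: int, height: int) -> Iterator[Tuple[int, int]]:
    """Yield (x, y) pixel coordinates in raster order: left to right along
    each line, lines from top to bottom."""
    for y in range(height):          # one line at a time, top to bottom
        for x in range(width):       # pixels within the line, left to right
            yield (x, y)


def active_pixel_rate(width: int, height: int, frames_per_second: int) -> int:
    """Active pixels sent per second by a progressive raster (blanking ignored)."""
    return width * height * frames_per_second


if __name__ == "__main__":
    print(list(raster_order(3, 2)))
    # [(0, 0), (1, 0), (2, 0), (0, 1), (1, 1), (2, 1)]
    print(active_pixel_rate(1920, 1080, 50))  # 103680000
```

At 1920x1080 and 50 frames per second that is 103,680,000 active pixels every second, all delivered in this one fixed order.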

Even in the modern era of digital television, the basic concept of scanning a picture in a raster from top left to bottom right at a fixed frame-rate remains. Modern professional television equipment is typically interconnected using SDI (Serial Digital Interface) links to carry the video (see Adam’s excellent article “Going the Distance with SDI” https://ripcorddesigns.com/blogs/broadcast-tech-talk/117737221-going-the-distance-with-sdi ). But even here, the video is still carried as a succession of pixels in strict raster order from top left to bottom right, repeating ad infinitum at the appropriate frame-rate. (MPEG and H.264 video compression have muddied the waters a little by dividing the picture into 16x16-pixel blocks known as macroblocks, which are in turn subdivided into smaller blocks such as 8x8, but even the macroblocks are transmitted in the usual top-left to bottom-right raster-scan order.)
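The macroblock ordering can be sketched in the same way. This minimal illustration assumes 16x16 macroblocks visited in plain raster order, with partial blocks at the right and bottom edges rounded up as if the picture were padded; the function name is hypothetical and it models only the visiting order, not any real encoder.

```python
from typing import Iterator, Tuple

MB_SIZE = 16  # macroblock size in pixels (luma)


def macroblock_order(width: int, height: int,
                     mb: int = MB_SIZE) -> Iterator[Tuple[int, int]]:
    """Yield the top-left pixel of each macroblock, visiting macroblocks
    in raster order. Partial blocks at the edges are rounded up, as if
    the picture were padded to a multiple of `mb`."""
    cols = -(-width // mb)   # ceiling division
    rows = -(-height // mb)
    for row in range(rows):          # rows of macroblocks, top to bottom
        for col in range(cols):      # macroblocks in the row, left to right
            yield (col * mb, row * mb)


if __name__ == "__main__":
    print(list(macroblock_order(48, 32)))
    # [(0, 0), (16, 0), (32, 0), (0, 16), (16, 16), (32, 16)]
    print(sum(1 for _ in macroblock_order(1920, 1080)))  # 8160
```

For a 1920x1080 picture this gives 120 x 68 = 8160 macroblocks (1080 is not a multiple of 16, so the bottom row is padded), still marching from top left to bottom right.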

If you have ever stopped to consider why we use this form of raster to scan the image, then you probably realise that it came about because driving the scan coils of an early CRT-based television set so that the electron beam traced out a raster was about the most complicated scan pattern that could be achieved with the electronics of the time.

My first job as a new graduate was with the BBC, working in their Research and Development Department. There I was lucky enough to meet and work with a brilliant engineer called John Drewery, who had hit on the idea of scanning an image not with a traditional raster but with a fractal pattern known as a Hilbert curve or Peano scan, which looks like this:

It turns out the Peano scan has a number of advantages over a traditional raster. The main one is that, in a video signal produced by a Peano scan, horizontal and vertical fine detail in the image generate the same frequency components. In a raster, by contrast, horizontally adjacent pixels are consecutive samples of the signal while vertically adjacent pixels are a whole line apart, so horizontal fine detail produces far higher frequencies than vertical fine detail. What this means is that any filtering of the video signal (along the transmission path, say) degrades horizontal and vertical fine detail equally, whereas in a traditional raster-scanned picture the horizontal fine detail is far more affected by filtering or bandwidth restriction than the vertical fine detail.
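To make the frequency argument concrete, here is a rough sketch (not the BBC implementation). It generates a Hilbert scan with the standard iterative index-to-coordinate construction, assuming an n x n picture with n a power of two, then scans two test patterns: vertical stripes one pixel wide (the finest horizontal detail) and horizontal stripes one line high (the finest vertical detail). The number of sample-to-sample transitions in the scanned signal stands in, crudely, for how much high-frequency content each pattern produces; all names are hypothetical.

```python
from typing import Callable, List, Tuple

Coord = Tuple[int, int]


def hilbert_d2xy(n: int, d: int) -> Coord:
    """Map position d (0 <= d < n*n) along a Hilbert curve to (x, y) on an
    n x n grid, n a power of two (standard iterative construction)."""
    x = y = 0
    t, s = d, 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:                      # rotate/flip the sub-square
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan(n: int) -> List[Coord]:
    return [hilbert_d2xy(n, d) for d in range(n * n)]


def raster_scan(n: int) -> List[Coord]:
    return [(x, y) for y in range(n) for x in range(n)]


def transitions(order: List[Coord], pixel: Callable[[int, int], int]) -> int:
    """Count changes between consecutive samples of the scanned signal:
    a crude proxy for how much high-frequency content the scan produces."""
    samples = [pixel(x, y) for x, y in order]
    return sum(a != b for a, b in zip(samples, samples[1:]))


def vertical_stripes(x: int, y: int) -> int:
    return x % 2    # one-pixel stripes: finest horizontal detail


def horizontal_stripes(x: int, y: int) -> int:
    return y % 2    # one-line stripes: finest vertical detail


if __name__ == "__main__":
    n = 8
    for name, order in (("raster", raster_scan(n)), ("hilbert", hilbert_scan(n))):
        print(name, transitions(order, vertical_stripes),
              transitions(order, horizontal_stripes))
    # raster 63 7
    # hilbert 31 32
```

With the raster, the one-pixel stripes give 63 transitions horizontally but only 7 vertically; with the Hilbert scan they give 31 and 32, so both orientations end up with roughly the same high-frequency content.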

BBC R&D actually put together a working TV system using this fractal scan pattern! As I recall, an oscilloscope was pressed into service as the display monitor, since its X and Y deflection could be driven independently to trace out the Peano scan. We subsequently extended the work into three dimensions and jointly filed UK patent GB2253111A (see http://patent.ipexl.com/GB/2253111-a.html ).
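An oscilloscope in X-Y mode simply traces whatever path its two deflection inputs describe, which is what made it usable as a Peano-scan monitor. The sketch below computes normalised X and Y deflection samples for a Hilbert scan; the full-scale amplitude, the linear mapping from pixel to deflection and the function names are assumptions for illustration, not details of the BBC equipment.

```python
from typing import List, Tuple


def hilbert_d2xy(n: int, d: int) -> Tuple[int, int]:
    """Standard iterative Hilbert index -> (x, y) mapping, n a power of two."""
    x = y = 0
    t, s = d, 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:                      # rotate/flip the sub-square
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_deflection(n: int, full_scale: float = 1.0) -> Tuple[List[float], List[float]]:
    """X and Y deflection samples (n >= 2) tracing a Hilbert scan in X-Y mode.
    Pixel columns map linearly onto [-full_scale, +full_scale]; rows are
    flipped because picture y runs downwards while scope Y runs upwards."""
    xs: List[float] = []
    ys: List[float] = []
    for d in range(n * n):
        x, y = hilbert_d2xy(n, d)
        xs.append(full_scale * (2 * x / (n - 1) - 1))
        ys.append(full_scale * (1 - 2 * y / (n - 1)))
    return xs, ys


if __name__ == "__main__":
    xs, ys = hilbert_deflection(2)
    print(xs)  # [-1.0, -1.0, 1.0, 1.0]
    print(ys)  # [1.0, -1.0, -1.0, 1.0]
```

Each step changes exactly one of the two deflection signals by one pixel pitch, whereas a raster needs a fast sawtooth on X and a slow ramp on Y.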

I’m not for one moment proposing that the broadcast world adopts the Peano scan in place of the traditional raster. However, I have recently found myself musing as to why we need the concept of a scan pattern and a frame-rate at all. After all, the human visual system does not employ such concepts. I therefore wonder whether the ideal way of conveying a picture from a TV camera to a TV display is not to scan it in raster form and send the brightness and colour of each pixel in strict sequence over a link, but to have a massively parallel connection in which each pixel on the camera sensor is connected via (effectively) a dedicated link to the corresponding pixel on the display. The moment a camera pixel changes brightness and/or colour, the change is immediately conveyed to the corresponding display pixel. Clearly, such a system does away with any notion of scanning or frame-rate. In some ways, it would be like watching a scene through a pane of glass or a telescope, where any change in the image is immediately conveyed to the corresponding cells of our retina.
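The per-pixel link idea can be modelled in a few lines. In this minimal sketch (class and method names are hypothetical), each camera pixel object holds a direct reference to the display and forwards a change the moment it sees one; there is no frame clock anywhere, and an unchanged pixel sends nothing. It models the logic only, not any physical link.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


class Display:
    """A display whose pixels can each be updated at any moment."""

    def __init__(self, width: int, height: int) -> None:
        self.pixels: List[List[RGB]] = [[(0, 0, 0)] * width for _ in range(height)]

    def set_pixel(self, x: int, y: int, rgb: RGB) -> None:
        self.pixels[y][x] = rgb


class CameraPixel:
    """One sensor pixel with its own (conceptual) dedicated link to the
    matching display pixel."""

    def __init__(self, x: int, y: int, display: Display) -> None:
        self.x, self.y = x, y
        self.display = display
        self.value: RGB = (0, 0, 0)
        self.sent = 0  # how many updates this link has carried

    def sense(self, rgb: RGB) -> None:
        """Called whenever the sensor reports a value for this pixel."""
        if rgb != self.value:            # only a change produces traffic
            self.value = rgb
            self.display.set_pixel(self.x, self.y, rgb)  # forward now, no frame clock
            self.sent += 1


if __name__ == "__main__":
    screen = Display(4, 3)
    p = CameraPixel(2, 1, screen)
    p.sense((140, 36, 80))
    p.sense((140, 36, 80))               # unchanged: nothing sent
    print(screen.pixels[1][2], p.sent)   # (140, 36, 80) 1
```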

I confess that achieving the required massively parallel connection between every pixel of the TV camera and the corresponding pixel of the TV display may be something of a challenge (especially at 4K UHD resolution, with its 3840 x 2160 = 8,294,400 pixels, and even more so if the aim were to broadcast such a signal to the home)! However, with a little inventiveness, I think such a scheme could be devised. After all, COFDM-based TV broadcasts use a large number of independent carriers to convey data, and this is a form of massively parallel connection. Alternatively (or perhaps additionally), I wonder if there is any benefit in conveying changes to a pixel’s brightness/colour from camera to display not in strict sequential order but out of sequence, perhaps in order of which pixels have changed most significantly. One could imagine a transmission path carrying a series of digital messages from camera to display as follows:

change pixel at coordinate (230,680) to RGB value (140,36,80)

change pixel at coordinate (1348,12) to RGB value (90,110,67)

change pixel at coordinate (16,720) to RGB value (140,36,80)

:

Etc.

Again, we have got away from the idea of a strict raster scan and the concept of a frame-rate.
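Here is a minimal sketch of such a message stream, under assumptions that are not part of the proposal itself: each message is packed as a hypothetical 7-byte record (x and y as 16-bit big-endian integers, then one byte each for R, G and B), the significance of a change is taken as the sum of its absolute RGB differences, and each transmission slot carries a fixed budget of messages, so the largest changes go first and smaller ones wait. The camera keeps its own record (`shown`) of what the display currently holds; all names are hypothetical.

```python
import struct
from typing import Dict, List, Tuple

Coord = Tuple[int, int]
RGB = Tuple[int, int, int]
BLACK: RGB = (0, 0, 0)

# Hypothetical wire format: x, y as big-endian uint16, then R, G, B bytes (7 bytes).
MSG = struct.Struct(">HHBBB")


def change_magnitude(old: RGB, new: RGB) -> int:
    """Assumed significance measure: sum of absolute RGB differences."""
    return sum(abs(a - b) for a, b in zip(old, new))


def prioritised_messages(shown: Dict[Coord, RGB], camera: Dict[Coord, RGB],
                         budget: int) -> List[bytes]:
    """Pack up to `budget` 'change pixel' messages, biggest change first."""
    changes = []
    for (x, y), rgb in camera.items():
        old = shown.get((x, y), BLACK)
        if old != rgb:
            changes.append((change_magnitude(old, rgb), x, y, rgb))
    changes.sort(key=lambda c: c[0], reverse=True)  # stable: ties keep input order
    return [MSG.pack(x, y, *rgb) for _, x, y, rgb in changes[:budget]]


def apply_messages(shown: Dict[Coord, RGB], messages: List[bytes]) -> None:
    """Display side: each message updates one pixel, in arrival order."""
    for m in messages:
        x, y, r, g, b = MSG.unpack(m)
        shown[(x, y)] = (r, g, b)


if __name__ == "__main__":
    camera = {(230, 680): (140, 36, 80), (1348, 12): (90, 110, 67),
              (16, 720): (140, 36, 80)}
    shown: Dict[Coord, RGB] = {}
    first = prioritised_messages(shown, camera, budget=2)
    print([MSG.unpack(m) for m in first])
    # [(1348, 12, 90, 110, 67), (230, 680, 140, 36, 80)]
    apply_messages(shown, first)
    print([MSG.unpack(m) for m in prioritised_messages(shown, camera, budget=2)])
    # [(16, 720, 140, 36, 80)]
```

Using the three example messages above with a budget of two, (1348,12) goes first (a change of 267), then (230,680) (a change of 256), while (16,720) waits for the next slot.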

I’d be fascinated to know if any research groups out there are looking at this. My Google searches so far have drawn a blank. So if you know of anyone working on this, I’d greatly appreciate it if you’d let me know by emailing me at peter@ripcorddesigns.com.